
Introduction

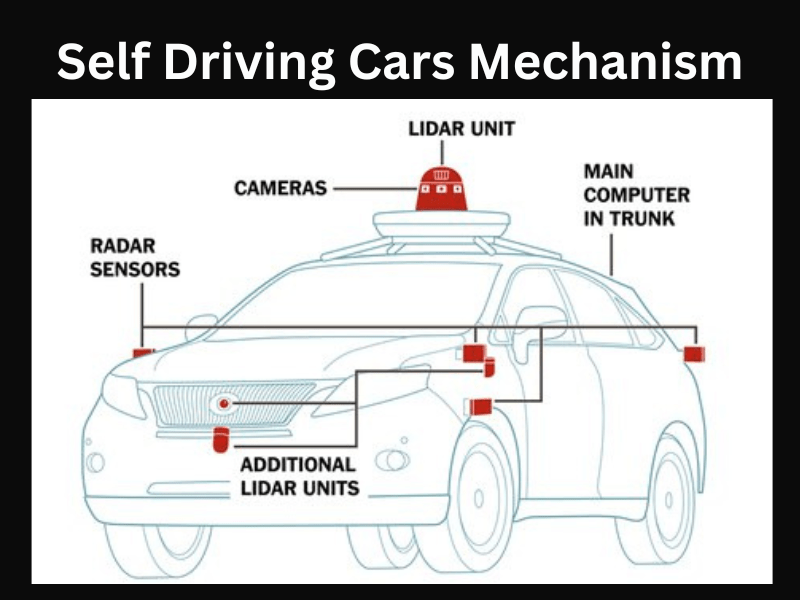

Self-driving cars, which rely primarily on computer vision for navigation and real-time decision-making, represent a revolutionary development in the automobile industry. This case study explores how computer vision works in self-driving cars, highlighting its advances, its open problems, and its potential to transform transportation.

Objective

The main goal of integrating computer vision into self-driving cars is to let them see and understand their environment on their own, recognize hazards, and make real-time decisions without human intervention. Cutting-edge algorithms and sensor technology are used to improve navigation accuracy, efficiency, and safety. Because it enables self-driving cars to comprehend and react to complex traffic situations, computer vision is essential to building a dependable, robust autonomous driving system.

The Key Challenges in Achieving Fully Autonomous Vehicles

Robust object detection and classification is a major challenge. Computer vision techniques tackle it by accurately recognizing and classifying the different objects around the car, so the vehicle can react to potential threats before they become hazards.
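To make the detection step more concrete, here is a minimal Python sketch of one common post-processing stage in object detectors. Non-maximum suppression (NMS) removes duplicate boxes that overlap too much, measured by intersection-over-union (IoU). The `(x1, y1, x2, y2)` box format, the `(box, score, label)` tuples, the function names, and the 0.5 threshold are all assumptions for illustration. They are not details taken from this case study.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x1 < x2 and y1 < y2
Detection = Tuple[Box, float, str]       # (box, confidence score, class label)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(dets: List[Detection], iou_threshold: float = 0.5) -> List[Detection]:
    """Keep the highest-scoring boxes; drop any box that overlaps an already-kept box too much.

    This simple version is class-agnostic: it compares boxes regardless of their labels.
    """
    kept: List[Detection] = []
    for det in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(det[0], k[0]) < iou_threshold for k in kept):
            kept.append(det)
    return kept


if __name__ == "__main__":
    raw = [
        ((0, 0, 10, 10), 0.9, "car"),
        ((1, 1, 10, 10), 0.8, "car"),          # near-duplicate of the first box
        ((20, 20, 30, 30), 0.7, "pedestrian"),
    ]
    for box, score, label in non_max_suppression(raw):
        print(label, score, box)
```

The duplicate car box overlaps the first one with an IoU of 0.81, so it is suppressed. The car and the pedestrian both survive.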

Computer vision also addresses the difficulty of depth perception, enabling cars to measure distances precisely and navigate complex situations. By providing a thorough, up-to-date awareness of the road, it plays a major role in making autonomous vehicles reliable and trustworthy and equips them to handle a dynamic driving environment. The key challenges in achieving fully autonomous vehicles are:

Sensor technology: Autonomous vehicles require extremely precise and dependable sensors. The sensors must identify objects in all weather and lighting conditions, and they must be able to follow an object's path over time.

Software development: Building the highly complex software for autonomous cars takes a team of engineers experienced in computer vision, machine learning, and artificial intelligence. The software must process sensor data in real time and make decisions that are both efficient and safe.

Mapping: For safe navigation, autonomous cars require an accurate, up-to-date, high-definition map of their surroundings. The map must also reflect changes in the environment, such as construction zones and traffic incidents.

Testing: Autonomous cars must be tested comprehensively across a wide range of situations. These include different weather conditions, different kinds of roads, and different traffic situations. A thorough testing procedure is required to make sure the cars are safe.

Regulatory approval: Autonomous vehicles require regulatory approval before they can be used on public roads. Obtaining it can be a costly and time-consuming process.
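The sensor requirement of following an object's path over time is commonly handled with a tracking filter. Below is a minimal Python sketch of an alpha-beta filter, a simplified relative of the Kalman filter. It smooths noisy 1-D range readings for a single object and estimates that object's velocity. The class name, the gain values, and the 1-D setup are assumptions for illustration. Production trackers typically use Kalman-style filters in 2-D or 3-D, and they must also decide which detection belongs to which track.

```python
from dataclasses import dataclass


@dataclass
class AlphaBetaTracker:
    """Hypothetical 1-D tracker: estimates the position and velocity of one object."""
    position: float          # current position estimate (m)
    velocity: float = 0.0    # current velocity estimate (m/s)
    alpha: float = 0.5       # assumed gain for correcting position
    beta: float = 0.1        # assumed gain for correcting velocity

    def update(self, measurement: float, dt: float) -> float:
        # Predict: assume the object kept moving at the estimated velocity.
        predicted = self.position + self.velocity * dt
        # Residual: how far the new measurement is from the prediction.
        residual = measurement - predicted
        # Correct both estimates in proportion to the residual.
        self.position = predicted + self.alpha * residual
        self.velocity += (self.beta / dt) * residual
        return self.position


if __name__ == "__main__":
    tracker = AlphaBetaTracker(position=0.0)
    dt = 0.1  # seconds between sensor frames (assumed)
    # Noise-free readings of an object moving away at 2 m/s.
    for k in range(1, 51):
        tracker.update(2.0 * k * dt, dt)
    print(f"position {tracker.position:.2f} m, velocity {tracker.velocity:.2f} m/s")
```

Even though the tracker starts with a velocity estimate of zero, its estimates settle on the object's true position and speed within a few dozen frames. Larger gains react faster but pass more sensor noise through to the estimate.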

Role of Artificial Intelligence in Creating Self-Driving Cars

The development of self-driving cars relies heavily on artificial intelligence. To sense their surroundings, make decisions, and steer, self-driving cars combine sensors, data processing systems, and artificial intelligence (AI) algorithms. AI gives the vehicle the ability to analyze sensor data, comprehend its environment, and navigate safely and effectively.

Self-driving cars use a range of AI systems built on different methods. These include computer vision algorithms for object detection and recognition, machine learning algorithms for perception and decision-making, and control systems that drive the vehicle.
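For the control-system side, below is a minimal Python sketch of a proportional-integral-derivative (PID) controller. It is a classic and deliberately simplified choice for lane keeping. It turns the car's lateral offset from the lane center into a steering command. The gains, the toy vehicle model in the usage example, and all names are hypothetical. Real vehicles use more elaborate controllers and vehicle models.

```python
from typing import Optional


class PIDController:
    """Textbook PID controller; the gains are hypothetical and would need tuning."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error: Optional[float] = None  # no derivative term on the first step

    def step(self, error: float, dt: float) -> float:
        # Accumulate the error over time for the integral term.
        self.integral += error * dt
        # Rate of change of the error for the derivative term.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


if __name__ == "__main__":
    # Toy model (assumed): the command directly sets the lateral speed of the car.
    offset = 1.0  # meters to one side of the lane center
    pid = PIDController(kp=1.0, ki=0.0, kd=0.0)
    dt = 0.1
    for _ in range(100):
        command = pid.step(0.0 - offset, dt)  # error = target offset (0) minus measured offset
        offset += command * dt
    print(f"remaining offset: {offset:.5f} m")
```

In this toy loop, the proportional term alone shrinks the offset by 10% per step. The integral and derivative terms matter once the model includes steady disturbances such as road camber, or inertia.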

Through machine learning, the software in self-driving cars can be trained on enormous volumes of data gathered across diverse scenarios and road conditions. The AI algorithms then learn to recognize patterns and anticipate outcomes by drawing on analogous scenarios in their training data. In general, the more data and the wider the variety of scenarios these systems are exposed to, the better they adapt and the sounder their decisions become.
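The idea of drawing on analogous scenarios in the training data can be illustrated with k-nearest neighbors, one of the simplest machine learning methods. It is used here only as a stand-in. Production self-driving systems rely mainly on deep neural networks, not on this method. The two features (object height and speed) and the training examples below are made-up toy values.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], str]  # (feature vector, label)


def knn_predict(train: List[Example], query: Sequence[float], k: int = 3) -> str:
    """Label the query by majority vote among its k closest training examples."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy training data (hypothetical): (height in m, speed in m/s) -> object type.
TRAIN: List[Example] = [
    ((1.7, 1.4), "pedestrian"),
    ((1.6, 1.2), "pedestrian"),
    ((1.8, 1.5), "pedestrian"),
    ((1.5, 13.0), "car"),
    ((1.4, 15.0), "car"),
    ((1.6, 11.0), "car"),
]

if __name__ == "__main__":
    print(knn_predict(TRAIN, (1.7, 1.0)))   # slow, person-height object -> pedestrian
    print(knn_predict(TRAIN, (1.5, 14.0)))  # fast-moving object -> car
```

Adding more examples from more scenarios gives the vote more relevant neighbors to draw on. This is a miniature version of why more varied data helps.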

Even with these advances in AI, contemporary self-driving cars still need human supervision and assistance. Unpredictable events, safety issues, and the complexity of real-world driving make it difficult for AI to handle every scenario on its own. Human assistance is still required to deal with extraordinary conditions and guarantee safety.

To boost self-driving capabilities, researchers and engineers are working to improve AI algorithms, gather more data, and strengthen the vehicle's perception and decision-making. Continued advances in AI, sensor technology, and processing power are expected to accelerate the development of self-driving cars and progressively reduce the need for human intervention.

Developing and deploying autonomous vehicles also requires integrating AI with other engineering disciplines, and addressing regulatory concerns, so that these vehicles operate securely and dependably.

How Do Self-Driving Cars Work?

Self-driving cars build on an Advanced Driver Assistance System (ADAS). The system's sensors are mounted on the front bumper. When they detect obstacles or other vehicles, the system alerts the driver and applies the brakes. ADAS performs several tasks. For example, it can read speed-limit signs and display the current limit next to the speedometer, so the driver knows the restriction on that road. It also includes specialized functions such as automatic emergency braking, where the car brakes on its own when a vehicle or obstacle gets too close.
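A common way to decide when automatic emergency braking should step in is time-to-collision (TTC). TTC is the gap to the object ahead divided by the speed at which that gap is closing. The minimal Python sketch below issues a warning first, and then brakes, as TTC falls. The 2.5 s and 1.5 s thresholds and the function names are assumptions for illustration. They are not values from any particular ADAS.

```python
import math


def time_to_collision(gap_m: float, ego_speed_mps: float, lead_speed_mps: float) -> float:
    """Seconds until contact if both speeds stay constant; inf if the gap is not shrinking."""
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return math.inf
    return gap_m / closing_speed


def braking_action(gap_m: float, ego_speed_mps: float, lead_speed_mps: float,
                   warn_below_s: float = 2.5, brake_below_s: float = 1.5) -> str:
    """Return 'brake', 'warn', or 'none' (the thresholds are hypothetical)."""
    ttc = time_to_collision(gap_m, ego_speed_mps, lead_speed_mps)
    if ttc < brake_below_s:
        return "brake"
    if ttc < warn_below_s:
        return "warn"
    return "none"


if __name__ == "__main__":
    print(braking_action(50.0, 20.0, 10.0))  # TTC 5 s -> none
    print(braking_action(20.0, 20.0, 10.0))  # TTC 2 s -> warn
    print(braking_action(10.0, 20.0, 10.0))  # TTC 1 s -> brake
```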

An autonomous vehicle does not see exactly the way a person does, although the way it perceives objects is broadly analogous to human vision. It relies on numerous sensors: radars, lidars, RGB-D (red, green, blue, and depth) cameras, and possibly more, depending on the vehicle's design.

Radar: Radars emit radio waves, which reflect (echo) off the surface of any object they hit. The sensor records these reflections and measures how long each one takes to return. The return time tells it how far away the object is, and from these measurements it builds a map. Autonomous vehicles have long employed radars. A radar can reliably tell whether an object is ahead, but its resolution is low, so it cannot provide highly detailed data about that object. Radar remains in use because it keeps working in bad weather.
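The distance to a reflecting object follows from the echo's round-trip time. The wave travels out and back at the speed of light, so range = c × t / 2. Below is a minimal Python sketch of that calculation. The function name is hypothetical, and the sketch ignores the speed and angle measurements that real automotive radars also make.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0  # speed of light in vacuum, m/s


def echo_range_m(round_trip_s: float) -> float:
    """Distance to the reflector: the wave covers the range twice (out and back)."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


if __name__ == "__main__":
    # An echo that returns after 1 microsecond came from about 150 m away.
    print(f"{echo_range_m(1e-6):.1f} m")  # -> 149.9 m
```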

Lidar: A lidar works like a radar but uses light instead of radio waves. It maps the area around it using the infrared pulses it emits, up to millions every second. It yields good results and works well regardless of lighting, day or night. However, its reliability in all conditions has not been demonstrated, and it is expensive. It also offers lower resolution than cameras, though that resolution is still sufficient to build a useful map of the surroundings.
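Each lidar return gives a range, computed from the pulse's round-trip time, and the direction the beam was pointing. Converting each return to x, y, z coordinates is what turns the stream of pulses into a 3-D point cloud. Below is a minimal Python sketch of that conversion. It assumes a sensor-centered frame with x forward, y left, and z up, with angles in degrees. The frame convention and the function names are assumptions for illustration.

```python
import math
from typing import Iterable, List, Tuple

SPEED_OF_LIGHT_MPS = 299_792_458.0
Point = Tuple[float, float, float]


def pulse_to_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one lidar return (time of flight + beam angles) to an (x, y, z) point in meters."""
    r = SPEED_OF_LIGHT_MPS * round_trip_s / 2.0  # out-and-back distance, halved
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (r * math.cos(el) * math.cos(az),  # x: forward
            r * math.cos(el) * math.sin(az),  # y: left
            r * math.sin(el))                 # z: up


def build_point_cloud(returns: Iterable[Tuple[float, float, float]]) -> List[Point]:
    """Map a sweep of (round_trip_s, azimuth_deg, elevation_deg) returns to 3-D points."""
    return [pulse_to_point(t, az, el) for t, az, el in returns]


if __name__ == "__main__":
    t10 = 2 * 10.0 / SPEED_OF_LIGHT_MPS  # round trip for an object 10 m away
    for x, y, z in build_point_cloud([(t10, 0.0, 0.0), (t10, 90.0, 0.0)]):
        print(f"({x:.2f}, {y:.2f}, {z:.2f})")
```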

RGB-D Camera: An RGB-D camera adds a depth value to each color pixel. One common way to obtain depth is a stereo camera, which has two lenses mounted a known distance apart and works much like a pair of human eyes. Each lens sees the same point from a slightly different position, so that point appears shifted between the two images. Because the separation and geometry of the two lenses are known, elementary trigonometry turns that shift into a depth estimate. These cameras are used to detect lanes, traffic signals, people waving at the car, and other objects. Their high resolution lets them identify such features fairly well.
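Consider a rectified stereo pair: two parallel cameras a baseline B apart, with focal length f in pixels. Similar triangles give the depth Z = f × B / d. Here d is the disparity, meaning how many pixels a point shifts between the left and right images. Below is a minimal Python sketch of the formula. The focal length and baseline in the example are hypothetical values.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point from its disparity in a rectified stereo pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    f_px, b_m = 700.0, 0.12  # hypothetical camera: 700 px focal length, 12 cm baseline
    for d in (42.0, 14.0, 7.0):
        print(f"disparity {d:>4.0f} px -> depth {stereo_depth_m(f_px, b_m, d):.1f} m")
```

Depth is inversely proportional to disparity, so distant objects produce small disparities. Their depth estimates are also less precise: a one-pixel matching error matters far more at 7 px than at 42 px.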

This is how an autonomous vehicle sees. Using these sensor data, the vehicle builds a map of its surroundings and identifies the objects in it. Engineers process the data with machine learning and computer vision. A great deal of work goes into this, but in essence computer vision identifies signs, lanes, and objects. Machine learning helps by telling the vehicle that a particular group of pixels is a person, a car, a traffic light, and so on.

Conclusion

Computer vision serves as the foundational technology for self-driving cars, reshaping our understanding and experience of transportation. At its core, computer vision enables vehicles to interpret and respond to the dynamic environment, acting as the eyes and brains of autonomous systems. This transformative technology empowers self-driving cars to navigate complex road scenarios, identify obstacles, and make real-time decisions with a level of precision and efficiency unmatched by traditional vehicles.

As technology advances, ongoing refinement of computer vision algorithms and hardware is steadily overcoming these challenges and driving the evolution of autonomous driving. The integration of computer vision promises greater safety, efficiency, and autonomy on our roads. With each innovation, we move closer to a driving experience with fewer accidents, smoother traffic flow, and technology woven seamlessly into daily commutes.


FAQ

Computer vision continuously scans the environment, helping self-driving cars plan routes and make real-time decisions.

Neural networks find patterns in the data and pass them on to the machine learning algorithms.

Using neural networks and computer vision, a self-driving car can identify lane lines on roads, recognize traffic signals, and avoid frontal collisions in a variety of weather scenarios.

Machine learning algorithms help a car gather information about its environment from cameras and other sensors, analyze it, and decide what to do next.